5 minutes ago, Max Power said:

Vector += VertexDeltaVector * [[ 0..1, X? / DistanceVertexToCorner ]]???

Taking the 1D case as example would be:

vertex1, position 4.3 and delta 0.6

vertex2, position 4.9 and delta 0.8

so vertex 1 would contribute to grid cell 4 with a weight of 0.7 and cell 5 with weight 0.3

and vertex 2 would contribute to grid cell 4 with a weight of 0.1 and cell 5 with weight 0.9

so we sum up in the grid cells both deltas multiplied by weight and just the weights seperately:

cell 4 = (0.6 * 0.7, 0.7) + (0.8 * 0.1, 0.1) = (0.5, 0.8)

cell 5 = (0.6 * 0.3, 0.3) + (0.8 * 0.9, 0.9) = (0.9, 1.2)

after all vertices have been injected we divide for each cell the sum of weighted deltas by the sum of weights to get the final deltas:

cell 4: 0.5 / 0.8 = 0.625

cell 5: 0.9 / 1.2 = 0.75

which is not far from the input deltas at those positions, so the math seems right. (I hope so at least  )

)

With more dimensions you just multiply the weights together (no euclidean distance to grid corner, but manhattan distance if you want).

Important: The above weighting is just a naive approach to deal with over/undersampling problem. There are other options, e.g. finding the closest grid corner for each vertex and snapping to this fo injection would also make sense because it avoids divisions by small weights. But for the boundary we reject reject small weights anyways so the above seems to work well.

I took my old code and refactored it a bit (likely i have some use for that too):

namespace Volume

{

template <typename T>

static void Inject (

std::vector<T> &volumeData, // weighted volume values data

std::vector<float> *volumeWeightAccum, // if given we try to average multiple samples per cell

const int dimX, const int dimY, const int dimZ, // volume dimensions

const vec position, const T value) // sample position (already scaled and transformd to volume space) and value

{

// calc integer grid indices and weight factors

float xf = position[0];

float yf = position[1];

float zf = position[2];

int xI = (int) xf; if (xI<0 || xI>=dimX-1) return;

int yI = (int) yf; if (yI<0 || yI>=dimY-1) return;

int zI = (int) zf; if (zI<0 || zI>=dimZ-1) return;

xf -= (float)xI;

yf -= (float)yI;

zf -= (float)zI;

float xg = 1 - xf;

float yg = 1 - yf;

float zg = 1 - zf;

// indices to 8 cells

int g = zI*(dimY*dimX) + yI*dimX + xI;

int g000 = g;

int g100 = g + 1;

int g010 = dimX + g;

int g110 = dimX + g + 1;

int g001 = dimY*dimX + g;

int g101 = dimY*dimX + g + 1;

int g011 = dimY*dimX + dimX + g;

int g111 = dimY*dimX + dimX + g + 1;

// inject weighted sample

volumeData[g000] += value * xg*yg*zg;

volumeData[g100] += value * xf*yg*zg;

volumeData[g010] += value * xg*yf*zg;

volumeData[g110] += value * xf*yf*zg;

volumeData[g001] += value * xg*yg*zf;

volumeData[g101] += value * xf*yg*zf;

volumeData[g011] += value * xg*yf*zf;

volumeData[g111] += value * xf*yf*zf;

if (volumeWeightAccum)

{

(*volumeWeightAccum)[g000] += xg*yg*zg;

(*volumeWeightAccum)[g100] += xf*yg*zg;

(*volumeWeightAccum)[g010] += xg*yf*zg;

(*volumeWeightAccum)[g110] += xf*yf*zg;

(*volumeWeightAccum)[g001] += xg*yg*zf;

(*volumeWeightAccum)[g101] += xf*yg*zf;

(*volumeWeightAccum)[g011] += xg*yf*zf;

(*volumeWeightAccum)[g111] += xf*yf*zf;

}

}

template <typename T>

static void NormalizeAndExtractBoundary (

std::vector< std::pair<int, T> > &boundary, // list of values to keep fixed <grind index, value>

std::vector<T> &volumeData,

const int dimX, const int dimY, const int dimZ,

const std::vector<float> &volumeWeightAccum,

const float minBoundaryWeight = 0.3f) // must be > 0

{

boundary.clear();

for (int i=0; i<dimX*dimY*dimZ; i++)

{

float auccumW = volumeWeightAccum[i];

if (auccumW >= minBoundaryWeight)

{

volumeData[i] *= 1/auccumW; // normalize

boundary.push_back(std::make_pair(i, volumeData[i])); // store as boundary cell

}

}

//// add the boundary of the volume as well as zeroes // nope - better handle this in the solver automatically by simply skipping volume boundary

//for (int x=0; x<dimX; x+=dimX-1)

//for (int y=0; y<dimY; y+=dimY-1)

//for (int z=0; z<dimZ; z+=dimZ-1)

//{

// int g = z*(dimY*dimX) + y*dimX + x;

// boundary.push_back(std::make_pair(i, T(0)));

//}

}

template <typename T>

static void SolveForHarmonicMap (

std::vector<T> &volumeData,

const std::vector< std::pair<int, T> > &boundary,

const int dimX, const int dimY, const int dimZ,

const int maxIterations)

{

// todo: very slow! Downsample volume, solve, upsample and start the full res solve with the upsampled guess; OpenCL?

std::vector<T> temp;

temp.resize(volumeData.size(), T(0));

for (int iter = 0; iter < maxIterations; iter++)

{

for (int x=1; x<dimX-1; x++)

for (int y=1; y<dimY-1; y++)

for (int z=1; z<dimZ-1; z++)

{

T valueSum(0);

//float weightSum = 0;

int g = z*(dimY*dimX) + y*dimX + x;

for (int u=-1; u<=1; u++)

for (int v=-1; v<=1; v++)

for (int w=-1; w<=1; w++)

{

if (!u&&!v&&!w) continue;

int ng = g + w*(dimY*dimX) + v*dimX + u;

float weight = (u ? 1 : 2) * (v ? 1 : 2) * (w ? 1 : 2);

//if (!iter&&x==1&&y==1&&z==1) SystemTools::Log("weight %f\n", weight);

//weightSum += weight;

valueSum += volumeData[ng] * weight;

}

//if (!iter&&x==1&&y==1&&z==1) SystemTools::Log("weightSum %f\n\n", weightSum);

//valueSum *= 1 / weightSum;

valueSum *= 1.f / 56.f;

temp[g] = valueSum;

}

// fix boundary

for (int i=0; i<boundary.size(); i++)

{

temp[boundary[i].first] = boundary[i].second;

}

// todo: break if difference between temp and volumeData is small enough, likely make this check every 8 iterations or so

volumeData = temp;

}

}

template <typename T>

static T Sample (

const vec position,

std::vector<T> &volumeData,

const int dimX, const int dimY, const int dimZ

)

{

// calc integer grid indices and weight factors

float xf = position[0];

float yf = position[1];

float zf = position[2];

int xI = (int) xf; if (xI<0 || xI>=dimX-1) return T(0);

int yI = (int) yf; if (yI<0 || yI>=dimY-1) return T(0);

int zI = (int) zf; if (zI<0 || zI>=dimZ-1) return T(0);

xf -= (float)xI;

yf -= (float)yI;

zf -= (float)zI;

float xg = 1 - xf;

float yg = 1 - yf;

float zg = 1 - zf;

// indices to 8 cells

int g = zI*(dimY*dimX) + yI*dimX + xI;

int g000 = g;

int g100 = g + 1;

int g010 = dimX + g;

int g110 = dimX + g + 1;

int g001 = dimY*dimX + g;

int g101 = dimY*dimX + g + 1;

int g011 = dimY*dimX + dimX + g;

int g111 = dimY*dimX + dimX + g + 1;

T sample (0);

sample += volumeData[g000] * xg*yg*zg;

sample += volumeData[g100] * xf*yg*zg;

sample += volumeData[g010] * xg*yf*zg;

sample += volumeData[g110] * xf*yf*zg;

sample += volumeData[g001] * xg*yg*zf;

sample += volumeData[g101] * xf*yg*zf;

sample += volumeData[g011] * xg*yf*zf;

sample += volumeData[g111] * xf*yf*zf;

return sample;

}

};

I have tested it with this:

static bool visVolume = 1; ImGui::Checkbox("visVolume", &visVolume);

if (visVolume)

{

static int init = 1;

static HEMesh mesh;

static std::vector<vec> volumeData;

static std::vector< std::pair<int, vec> > boundary;

const int dim = 32;

static int solverIterationsCount = 0;

if (init || ImGui::Button("Reload"))

{

//((HEMesh_Serialization&)mesh).LoadMesh ("C:\\dev\\pengII\\temp\\template_mesh.mesh");

((HEMesh_Serialization&)mesh).LoadMesh ("C:\\dev\\pengII\\mod\\bunny closed.MTC10.000000.hem");

// transorm mesh vertices to fit into volume (mesh is centered and has a size of about 20)

for (int i=0; i<mesh.GetVertexCount(); i++)

{

vec p = mesh.GetVertexPos(i);

p *= float (dim) / 10.f;

p += vec(float (dim/2));

mesh.SetVertexPos(i, p);

}

volumeData.clear();

volumeData.resize(dim*dim*dim, vec(0)); // important to init with zeroes

std::vector<float> volumeWeightAccum;

volumeWeightAccum.resize(dim*dim*dim, 0);

// inject vertices, using their normal as example delta

for (int i=0; i<mesh.GetVertexCount(); i++)

{

vec p = mesh.GetVertexPos(i);

vec delta = mesh.CalcVertexNormal(i) * -float(dim)/10; // normals point inwards

Volume::Inject (volumeData, &volumeWeightAccum,

dim,dim,dim, p, delta);

}

Volume::NormalizeAndExtractBoundary (boundary, volumeData, dim,dim,dim,

volumeWeightAccum);

solverIterationsCount = 0;

//Volume::SolveForHarmonicMap (volumeData, boundary, dim,dim,dim, dim*4); // dim*4 seems a good value in any case it seems

//std::vector< std::pair<int, vec> > emptyBoundary;

//Volume::SolveForHarmonicMap (volumeData, emptyBoundary, dim,dim,dim, 3); // without boundary this acts as of smoothing

}

static bool solvePerFrame = 0; ImGui::Checkbox("solvePerFrame", &solvePerFrame);

if (solvePerFrame)

{

Volume::SolveForHarmonicMap (volumeData, boundary, dim,dim,dim, 1);

solverIterationsCount++;

}

ImGui::Text("solverIterationsCount %i", solverIterationsCount);

static bool visTemplate = 0; ImGui::Checkbox("visTemplate", &visTemplate);

if (visTemplate) ((HEMesh_Vis&)mesh).RenderMesh(0, false);

static bool visDeformed = 0; ImGui::Checkbox("visDeformed", &visDeformed);

if (visDeformed)

{

for (int i=0; i<mesh.GetVertexCount(); i++)

{

vec p = mesh.GetVertexPos(i);

vec delta = Volume::Sample(p, volumeData, dim,dim,dim);

p += delta;

RenderPoint(p, 1,1,1);

}

}

static bool visSlice = 1; ImGui::Checkbox("visSlice", &visSlice);

if (visSlice)

{

float z=dim/2;

for (float x=0; x<=dim; x+=0.5f)

for (float y=0; y<=dim; y+=0.5f)

{

vec p(x,y,z);

vec delta = Volume::Sample(p, volumeData, dim,dim,dim);

RenderArrow(p, delta, 0.1f, 1,1,1);

//RenderPoint(p, 0.1f, 1,1,1);

}

}

init = 0;

}

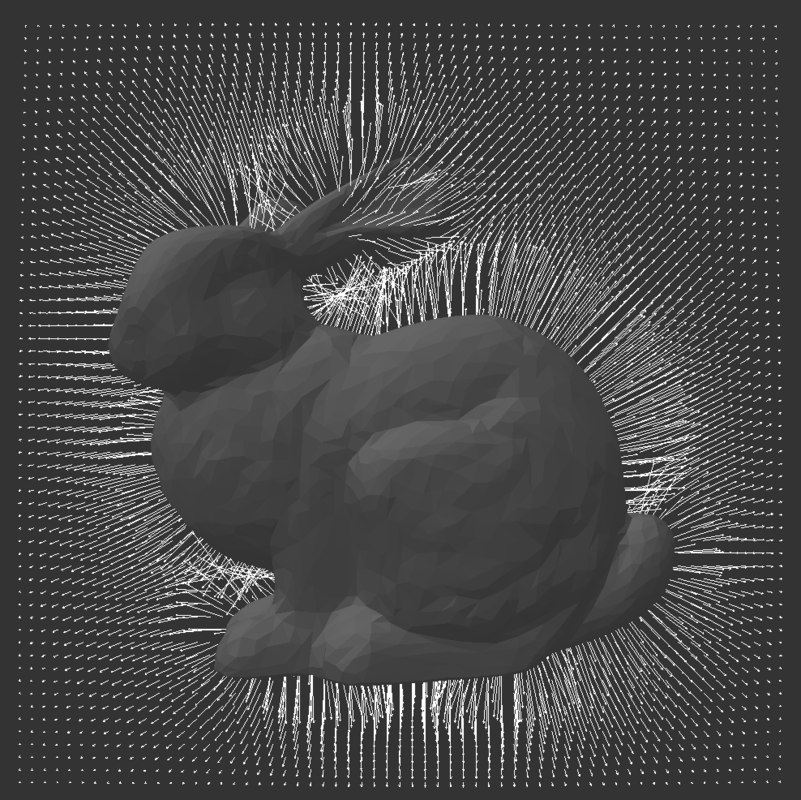

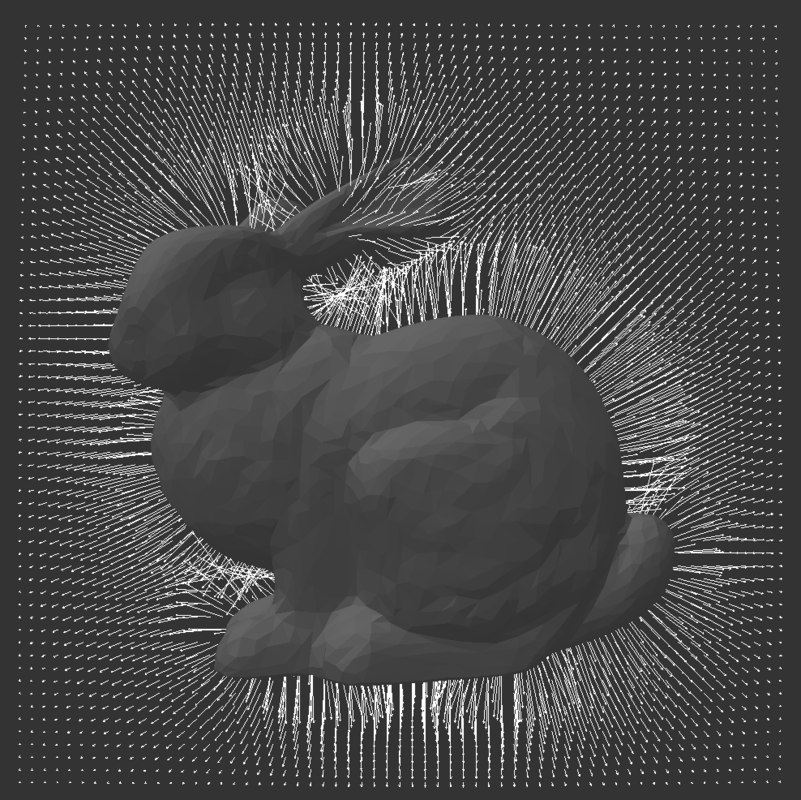

And i get this result (using normals as deltas):

The distance from the model to the volume boundary affects the results.

If it's too small, the deltas shrink too quickly when cloth is far from the skin.

If it's too large the solver needs more runtime.