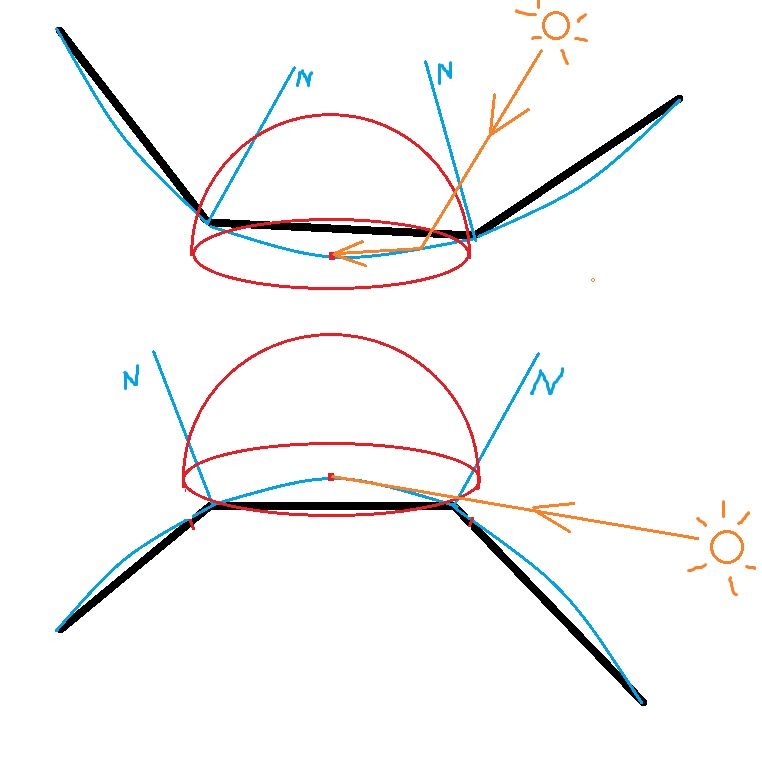

Is there a problem with the widely adopted approach to lighting when it uses the interpolated normals of smoothed meshes?

Upper case - Shouldn't it self-intersect with its own triangle?

Lower case - Can't it happen that light rays come from outside the hemisphere?

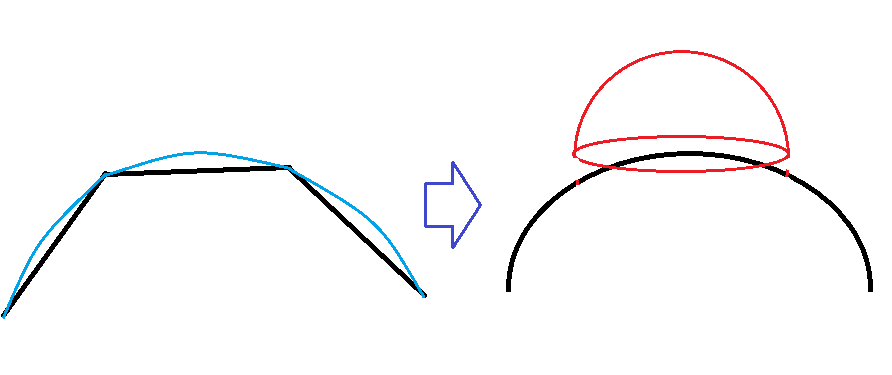

The XYZ position of the red dot actually lies on the black line (the surface), but for the computation I assume its 3D position is displaced along the interpolated normal to alleviate this effect:

https://computergraphics.stackexchange.com/questions/4986/ray-tracing-shadows-the-shadow-line-artifact

Hemisphere used for lighting and heavily smoothed meshes

I'm not sure I'd call it a problem. Of course Phong shading is only an approximation. Ray tracing is better but there is a heavy performance cost, and even that's not perfect. I guess it's becoming more viable now. Is there a specific issue you are trying to resolve? For real-time rendering you typically make some compromises.

6 hours ago, NikiTo said: Lower case - Can't it happen that light rays come from outside the hemisphere?

The light that comes from below the normal plane hits the back side of the sample point and so does not contribute.

Even if you care about translucent media like skin, those backside rays would not collide and would just pass the object.

6 hours ago, NikiTo said: Upper case - Shouldn't it self-intersect with its own triangle?

We could say those rays are shadowed and the effect is intended. The geometry occludes itself, which is something baked ambient occlusion would try to capture.

Displacing the sample position to the blue line is an interesting idea, but if it causes more issues than improvements maybe just interpolating the normal as usual works better.

Imagine zooming in on the sample point. The blue line then becomes more and more straight. (The blue line is piecewise linear, so we can treat it as straight within an infinitesimally small region.) If you see it this way, the doubts and confusion about the hemisphere model should disappear. The remaining error and artifacts are a result of discretizing smooth geometry with meshes, and you likely cannot solve this completely by trying to reconstruct the smooth surface, as you seem to be doing.

Ok, guys, I infinitely tessellated the geometry, and with an infinitely small sphere it seems to work:

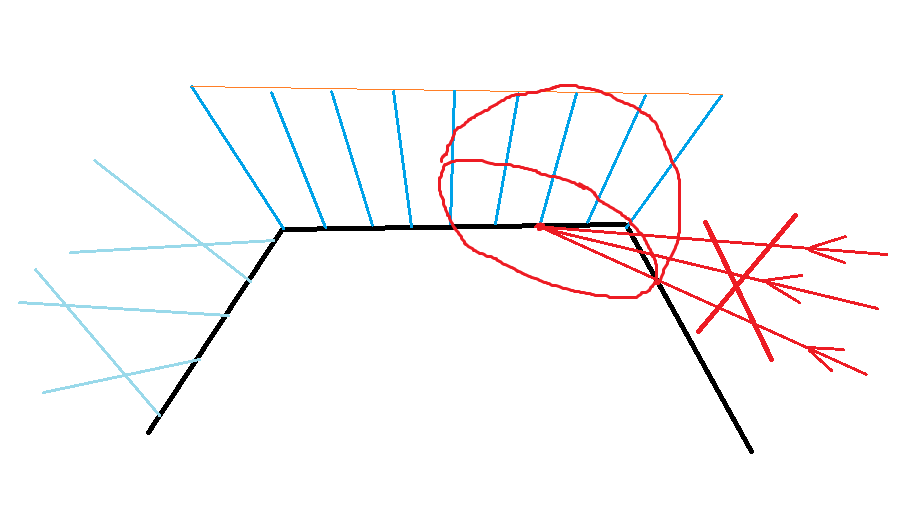

Still, I need to account for self-intersection:

(it looks ugly, I cannot rotate ellipses in Paint)

And it gets even worse with normal mapping on the left side.

But if I test for self-intersection, I can get shadow acne.

So I will just cut a piece from the hemisphere when I generate new rays. The part of the hemisphere that goes below/behind its own triangle will be cut off. Then I can keep testing for intersections without worrying about its own triangle.

I will think about translucent materials when the moment to use opacity comes...

6 hours ago, NikiTo said: So i will just cut a piece from the hemisphere at the time i generate new rays.

You could do that easily by rejecting all rays behind the triangle normal.
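A minimal sketch of that rejection in Python, assuming unit-length normals. The names `sample_hemisphere` and `sample_visible_hemisphere` are hypothetical helpers made up for this illustration, and uniform sampling is used only to keep it short:

```python
import math
import random


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v):
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


def sample_hemisphere(normal, rng):
    """Uniform random unit direction in the hemisphere around `normal`."""
    while True:
        # Rejection-sample a point inside the unit ball, then project it onto the sphere.
        d = (rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0))
        len2 = dot(d, d)
        if 1e-12 < len2 <= 1.0:
            d = normalize(d)
            # Flip directions that point away from the normal.
            return d if dot(d, normal) >= 0.0 else (-d[0], -d[1], -d[2])


def sample_visible_hemisphere(shading_n, face_n, rng, max_tries=64):
    """Sample around the shading normal, rejecting rays behind the triangle normal."""
    for _ in range(max_tries):
        d = sample_hemisphere(shading_n, rng)
        if dot(d, face_n) > 0.0:
            return d
    return None  # assumption: the caller decides how to handle a failed sample
```

Note that plain rejection shrinks the sampled solid angle, so an estimator built on it would have to account for the changed sample density; the sketch does not do that.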

But I wonder if this causes visible artifacts, and I don't know what's common practice here. Anybody?

My own toy raytracer only uses triangle normals - no normal maps or interpolated vertex normals.

So I'm curious. Keep us updated if you have some renders...

Having light rays below the hemisphere is one thing... But this situation can also cause the viewing ray to be below the horizon (so N dot V is negative). Some BRDFs give crazy results in that case, so you have to clamp it to zero... and if you clamp it to zero, you can end up with a weird edge area with flat/cut-off looking shading.
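To make the case concrete, here is a small Python sketch with made-up vectors (the specific normals and view direction are assumptions chosen for illustration): the triangle faces the viewer, yet N dot V with the interpolated normal is negative and gets clamped to zero.

```python
import math


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v):
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


def clamped_cos(n, w):
    """Cosine between unit vectors n and w, clamped to zero below the horizon."""
    return max(0.0, dot(n, w))


face_n = (0.0, 0.0, 1.0)                 # geometric (triangle) normal
shading_n = normalize((-1.0, 0.0, 1.0))  # interpolated normal, tilted 45 degrees
view = normalize((1.0, 0.0, 0.1))        # grazing view from the front side

n_dot_v_face = dot(face_n, view)         # positive: the triangle faces the viewer
n_dot_v_shading = dot(shading_n, view)   # negative: below the shading horizon
n_dot_v = clamped_cos(shading_n, view)   # clamped value fed to the BRDF
```

Every pixel in that region receives the same clamped value, which is where the flat, cut-off look comes from.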

(FWIW, both light rays and view rays should be of equal importance because of Helmholtz reciprocity -- swapping L and V in a BRDF should give identical results -- but in practice I've noticed some differences)

I'm interested in workarounds too.

The simple solution is to avoid meshes so low-poly that the vertex normal and the triangle normal can differ extremely... Also, if you've baked normal maps from a very high-poly mesh onto a low-poly one, then the normal map will somewhat correct for this issue on the low-poly mesh (but at the same time, may also cause the problem...)

On 5/18/2019 at 4:53 PM, JoeJ said: The light that comes from below the normal plane hits the back side of the sample point and so does not contribute

If you do this for viewing rays, you end up with ugly black regions that show up around mesh silhouettes... And if you do it for light rays but not view rays, you've violated Helmholtz reciprocity (fine for a hacky renderer, not for a film quality offline one).

hmmm... One idea would be to lerp between the face normal and the interpolated normal, using the face normal dot product as the weight, so that shallow angles expose the low-poly mesh but avoid the artifacts. I guess this works fine for N dot L, because at silhouettes the real mesh becomes visible anyway. Curious how this would look for integrated lighting over the hemisphere...
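One possible reading of that idea, sketched in Python. The weight (the clamped cosine between the face normal and the light or view direction `w`) and the name `blended_normal` are assumptions made for this illustration, not something tested in a renderer:

```python
import math


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v):
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


def blended_normal(face_n, interp_n, w):
    """Lerp from the face normal (grazing w) to the interpolated normal (head-on w).

    All inputs are unit vectors; the two normals are assumed to lie in the
    same hemisphere, so the lerp never collapses to a zero vector.
    """
    # Assumed weight: cosine between face normal and direction w, clamped to [0, 1].
    t = max(0.0, min(1.0, dot(face_n, w)))
    mixed = tuple(f + (i - f) * t for f, i in zip(face_n, interp_n))
    return normalize(mixed)
```

With `w` along the face normal this returns the interpolated normal unchanged, and with `w` parallel to the triangle it returns the face normal, so the result can never put a grazing `w` below the horizon.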