Hi, first post here. I'm an experienced C++ programmer, but I've never used a game engine before. A few years back I wrote some procedural planet code that used DirectX 9. It worked pretty well, but it was restricted to height maps. However, it did support truly huge worlds and let me walk around on them. I've now updated it to use octrees of prism-shaped voxels, so I can do caves and such using something akin to marching cubes. I haven't updated the graphics in a long time, though, and I would like to use a game engine instead of DirectX if possible.

I use fractals to generate terrain in real time, so I don't really have to worry about loading anything from disk. However, I do have to do some special things to handle the lack of double precision on the graphics card. I generate terrain chunks in double precision, and when I send them to the graphics card I convert them to single precision and transform them to their final location. I guess I'm kind of moving the terrain around the player rather than the other way around: the player is always close to (0,0,0). That works pretty well, since the LOD code is sophisticated enough to reduce terrain detail appropriately.

I do collision myself in a separate thread, with a separate world model that exists only around the player, to avoid race conditions with the LOD. So I don't strictly need collision code, but it might be nice to use real game engine collision code, which is probably faster than my clunky code. All I really need right now is sphere-to-mesh collision.

So my question is: are there any game engines that will handle this sort of thing, or at least not get in the way, or should I go back to using DirectX?
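The double-to-single conversion described above is often called camera-relative (or floating-origin) rendering. Here is a minimal C++ sketch of the idea, assuming hypothetical `DVec3`/`FVec3` types and a flat vertex list per chunk; it is an illustration, not the poster's code. The key step is to subtract the player position while still in double precision and only then narrow to float.

```cpp
#include <vector>

// World-space position, kept in double precision on the CPU.
struct DVec3 { double x, y, z; };
// Render-space position, in the single precision the GPU works with.
struct FVec3 { float x, y, z; };

// Re-express a chunk's vertices relative to the player, so the player sits at
// the render-space origin and float precision is spent on nearby geometry.
std::vector<FVec3> toCameraRelative(const std::vector<DVec3>& worldVerts,
                                    const DVec3& playerPos) {
    std::vector<FVec3> out;
    out.reserve(worldVerts.size());
    for (const DVec3& v : worldVerts) {
        // Subtract in double first, then narrow: the small difference
        // survives the cast to float far better than the raw coordinate.
        out.push_back({static_cast<float>(v.x - playerPos.x),
                       static_cast<float>(v.y - playerPos.y),
                       static_cast<float>(v.z - playerPos.z)});
    }
    return out;
}
```

For comparison, casting a raw world coordinate such as 6,371,000.01 straight to float gives 6,371,000.0, because floats of that magnitude are spaced 0.5 apart.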


Is there any game engine that supports a large world model?

4 hours ago, Gnollrunner said: I'm an experienced C++ programmer

Then I would recommend the Unreal Engine. The engine is built for large open-world AAA games: it has level streaming, an amazing instancing system, a huge number of open-world tools, and the best terrain tools I have ever worked with.

The only problem with Unreal is that it isn't very beginner-friendly, but you don't look like a beginner. It also takes a while to get used to how the engine works.

A game engine per se doesn't either support or not support huge or endless worlds. It is a question of how well it supports chunking your world into pieces, which you can also do by hand. I implemented such a system in Unity for our 1,600 km² world map by simply splitting the world into different scenes that were loaded and unloaded by a quadtree-based management class.
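A quadtree that decides which map pieces to keep loaded might look roughly like this. It is a hypothetical C++ illustration, not the Unity implementation mentioned above: `Cell`, `collectLeaves`, and the 1.5 split factor are assumptions, and in a Unity project each leaf could correspond to a scene to load, with everything else unloaded.

```cpp
#include <cmath>
#include <vector>

// One square cell of the world map (units: kilometres).
struct Cell { double minX, minY, size; int depth; };

// Recursively subdivide the map: cells close to the player (relative to their
// own size) are split, distant ones stay coarse. The leaves are the pieces to
// keep loaded; anything not in the list can be unloaded.
void collectLeaves(const Cell& c, double px, double py, int maxDepth,
                   std::vector<Cell>& leaves) {
    const double cx = c.minX + c.size * 0.5;
    const double cy = c.minY + c.size * 0.5;
    const double dist = std::hypot(px - cx, py - cy);
    // Assumed rule: split while the player is within 1.5 cell widths.
    if (c.depth < maxDepth && dist < c.size * 1.5) {
        const double h = c.size * 0.5;
        for (int i = 0; i < 4; ++i) {
            Cell child{c.minX + (i % 2) * h, c.minY + (i / 2) * h, h, c.depth + 1};
            collectLeaves(child, px, py, maxDepth, leaves);
        }
    } else {
        leaves.push_back(c);
    }
}
```

For a 40 km × 40 km (1,600 km²) map with the player near one corner, the leaves near the player come out small and the far quadrants stay as single large cells, while together they still cover the whole map.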

First, thanks for the replies, guys. What I'm worried about is that the way I'm doing things may be somewhat alien to the way things are done in a game engine. First off, I currently have no terrain data stored anywhere on disk. There are only about 20 parameters that control the terrain generation, and they are hard-coded into the program (although they could be read from a file). When the program executes, it runs the fractal function(s) at the corners of the voxels and generates triangle data, which gets combined into meshes.

The number and size of chunks change as you move around, so the program keeps running the fractal functions to generate new terrain, and it also throws out old terrain. Chunks twice as far away will be twice as large but the voxels will also be twice as big, so the data size remains roughly the same per chunk. There are two meshes for each chunk, a core mesh and a border mesh. The border mesh is built by special routines that generate geometry to transition from lower-resolution to higher-resolution chunks. This way, if a chunk remains the same from one iteration to the next but its neighbor changes, I only have to recalculate and send down the border of that chunk.
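The distance-based chunk sizing and the border-only rebuild rule described above could be expressed roughly as follows. This is a minimal sketch: `lodLevel`, `voxelSize`, `ChunkLod`, and `whatToRebuild` are hypothetical names, and the poster's actual prism-octree code is not shown in the thread.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Level 0 covers distances up to baseDistance; level k covers
// [baseDistance * 2^(k-1), baseDistance * 2^k), so each doubling of distance
// moves one level coarser.
int lodLevel(double distance, double baseDistance, int maxLevel) {
    if (distance <= baseDistance) return 0;
    const int level = static_cast<int>(std::floor(std::log2(distance / baseDistance))) + 1;
    return std::min(level, maxLevel);
}

// Voxel edge length doubles with each level, so voxels per chunk stay constant.
double voxelSize(double baseVoxel, int level) {
    return std::ldexp(baseVoxel, level);  // baseVoxel * 2^level
}

enum class Rebuild { None, BorderOnly, Full };

// Hypothetical per-chunk record: its own level plus its neighbours' levels.
struct ChunkLod {
    int level;
    std::vector<int> neighbourLevels;
};

// If the chunk itself changed level, regenerate everything; if only a
// neighbour changed, only the transition (border) mesh needs redoing.
Rebuild whatToRebuild(const ChunkLod& before, const ChunkLod& after) {
    if (before.level != after.level) return Rebuild::Full;
    if (before.neighbourLevels != after.neighbourLevels) return Rebuild::BorderOnly;
    return Rebuild::None;
}
```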

So in the end what I get is a bunch of meshes stored on the CPU side, and every iteration I need to send any new meshes to the card and delete any meshes I no longer need. The planet can actually be very large (thousands of kilometers in diameter) because you only need to generate chunks for the part you can see. As I said, the rest of the data doesn't exist anywhere when it's not in use.
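The per-iteration bookkeeping (upload new meshes, delete the ones no longer needed) reduces to two set differences over chunk identifiers. A sketch, assuming a hypothetical 64-bit `ChunkKey` that uniquely identifies a chunk; the actual GPU upload and release calls are left out.

```cpp
#include <algorithm>
#include <cstdint>
#include <iterator>
#include <set>
#include <vector>

// Hypothetical chunk id, e.g. LOD level and octree coordinates packed into 64 bits.
using ChunkKey = std::uint64_t;

struct MeshDiff {
    std::vector<ChunkKey> toUpload;  // present now, absent last iteration
    std::vector<ChunkKey> toDelete;  // present last iteration, no longer needed
};

MeshDiff diffChunks(const std::set<ChunkKey>& previous, const std::set<ChunkKey>& current) {
    MeshDiff d;
    // std::set keeps its keys sorted, which std::set_difference requires.
    std::set_difference(current.begin(), current.end(), previous.begin(), previous.end(),
                        std::back_inserter(d.toUpload));
    std::set_difference(previous.begin(), previous.end(), current.begin(), current.end(),
                        std::back_inserter(d.toDelete));
    return d;
}
```

Chunks whose keys appear in both sets are left untouched on the GPU, which is what makes the scheme cheap when the player moves only a little.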

All this is already implemented. What I'm a bit worried about is that I'm already holding mesh data in my own format on the CPU side. If I use DirectX, I can send it straight to GPU memory. If I send it to a game engine through some sort of interface, though, I'm guessing the engine might store it internally in its own format, so I would end up with an extra copy of the data. I guess what I would like is a game engine with an interface that lets you write directly to the graphics card. Then I could use that for the terrain and use the engine's built-in features for the rest of the assets.

7 hours ago, Shaarigan said: map by simply splitting the world into different scenes that were loaded/unloaded by a quad tree based management class

That is the correct way to start with open-world games; this is known as level streaming. Unity now has better level streaming, but it is still very weak compared to Unreal's.

The problem is that it is just the start. Unity's whole rendering pipeline is out of date; it is fantastic for story-driven, small-world games, but horrible in every way for open-world games.

For a simple comparison, Google "Open World Unity" and "Open World Unreal" and see for yourself what the average Unity terrain looks like compared to the average Unreal terrain.

4 hours ago, Gnollrunner said: the way I'm doing things may be somewhat alien

It really isn't. This is exactly how Unreal's terrain works, except that it doesn't allow for cavities because they are bad for performance. Unreal uses voxel-like data under the terrain (an invisible point cloud) and skins over it to produce its mesh.

As a result, it has tools like Gizmos that let you copy and edit this data to create new data, and it has a fantastic terrain LOD system that doesn't deform much as you move away.

With the older heightmap-texture-only approach, by contrast, copying data depended on the pixels, and operations like rotating part of the terrain produced lower quality.

Another nice thing is that the point cloud data is stored as a RAW file, so it can be opened in ZBrush and other 3D software for quick tweaking.

4 hours ago, Gnollrunner said: so the data size remains roughly the same per chunk

Yes, octrees and many other data forms also work like this. They all have the same problem and that is the loss of data.

So say you have 1024 data points, and each time the view range doubles you lose 50% of the data. That gives: 1024 -> 512 -> 256 -> 128 -> 64 -> 32 -> 16 -> 8 -> 4 -> 2 -> 1. That means you only get 11 levels (10 doublings of the starting range) before the data is so sparse it is just a giant blob.
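Written out under the model described in this post (each doubling of the view range halves the number of data points, starting from $N_0 = 1024$ at range $R_0$), the arithmetic is as follows. This only formalizes the claim; it does not show that the model applies to every LOD scheme.

$$
N_k = \frac{N_0}{2^{k}}, \qquad R_k = 2^{k} R_0
$$

$$
N_k = 1 \;\Rightarrow\; k = \log_2 1024 = 10, \qquad R_{10} = 2^{10} R_0 = 1024\,R_0
$$

So the sequence 1024 -> ... -> 1 has 11 entries ($k = 0$ to $10$), and its last entry is reached after 10 doublings, at 1024 times the starting range.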

You can see this happening in the above image, with the white mesh having small details and the purple using large meshes. The terrain above is also gigantic: you will see a kind of grid on the white, and each cell of that grid is at least a 1,048,576-polygon zone. The terrain in that image is around 4 million polygons.

This means the terrain above is using roughly 60% of the computer's resources just to render.

Quote: It really isn't. This is exactly how Unreal's terrain works, except that it doesn't allow for cavities because they are bad for performance. Unreal uses voxel-like data under the terrain (an invisible point cloud) and skins over it to produce its mesh.

I'm not sure that I understand. My impression of Unreal is that the data is still stored on disk, although it may have been generated procedurally at some point. Does Unity actually support run-time procedural planet-sized worlds? When I google it, I see a lot of procedural plugins that generate terrain, but nothing that uses procedures to generate terrain while the game is running. That's kind of a different animal, and as far as I know it's the only way to get a truly large world without using an impossible amount of disk space.

Also I don't understand why it would use voxels if it didn't support cavities. As far as I know, that's the main reason you would use voxels in the first place, along with destructible terrain.

Quote: Yes, octrees and many other data forms also work like this. They all have the same problem and that is the loss of data.

I think we are talking about different things. I don't use any stored data so there is no loss. It's all just a function, continuous over the entire world. The octrees of voxels are only used to generate meshes from the functions, based on how far a chunk is from the player. They are constantly regenerated as the player moves, so they will always be reasonably detailed with respect to the distance from the camera.
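A terrain defined "just as a function" can be evaluated at any double-precision position without stored data. The thread doesn't say which fractal the poster uses, so this sketch substitutes fractional Brownian motion (fBm) over hashed value noise. The hash constants, the 1 km feature scale, the octave count, and `planetDensity` are all assumptions. Negative density means solid ground, so a mesher can extract the surface wherever the sign changes, including around caves.

```cpp
#include <cmath>
#include <cstdint>

// Hash an integer lattice point to a pseudo-random value in [-1, 1).
double latticeValue(std::int64_t x, std::int64_t y, std::int64_t z, std::uint64_t seed) {
    std::uint64_t h = seed;
    h ^= static_cast<std::uint64_t>(x) * 0x9E3779B97F4A7C15ULL;
    h ^= static_cast<std::uint64_t>(y) * 0xC2B2AE3D27D4EB4FULL;
    h ^= static_cast<std::uint64_t>(z) * 0x165667B19E3779F9ULL;
    // splitmix64-style finalizer to scramble the bits.
    h ^= h >> 30; h *= 0xBF58476D1CE4E5B9ULL;
    h ^= h >> 27; h *= 0x94D049BB133111EBULL;
    h ^= h >> 31;
    // Top 53 bits -> [0, 2) -> [-1, 1).
    return static_cast<double>(h >> 11) * (2.0 / 9007199254740992.0) - 1.0;
}

double smoothstep(double t) { return t * t * (3.0 - 2.0 * t); }
double mix(double a, double b, double t) { return a + (b - a) * t; }

// Trilinearly interpolated value noise; continuous everywhere.
double valueNoise(double x, double y, double z, std::uint64_t seed) {
    const double fx = std::floor(x), fy = std::floor(y), fz = std::floor(z);
    const auto ix = static_cast<std::int64_t>(fx);
    const auto iy = static_cast<std::int64_t>(fy);
    const auto iz = static_cast<std::int64_t>(fz);
    const double tx = smoothstep(x - fx), ty = smoothstep(y - fy), tz = smoothstep(z - fz);
    double plane[2];
    for (int dz = 0; dz < 2; ++dz) {
        const double v00 = latticeValue(ix, iy, iz + dz, seed);
        const double v10 = latticeValue(ix + 1, iy, iz + dz, seed);
        const double v01 = latticeValue(ix, iy + 1, iz + dz, seed);
        const double v11 = latticeValue(ix + 1, iy + 1, iz + dz, seed);
        plane[dz] = mix(mix(v00, v10, tx), mix(v01, v11, tx), ty);
    }
    return mix(plane[0], plane[1], tz);
}

// Fractional Brownian motion: each octave doubles frequency and halves amplitude.
double fbm(double x, double y, double z, int octaves, std::uint64_t seed) {
    double sum = 0.0, amplitude = 0.5, frequency = 1.0;
    for (int i = 0; i < octaves; ++i) {
        sum += amplitude * valueNoise(x * frequency, y * frequency, z * frequency, seed + i);
        amplitude *= 0.5;
        frequency *= 2.0;
    }
    return sum;  // |sum| < 1 because the amplitudes add up to less than 1
}

// Signed density of a planet: negative inside solid ground, positive in air.
double planetDensity(double x, double y, double z, double radius, double amplitude) {
    const double r = std::sqrt(x * x + y * y + z * z);
    // Assumed feature scale: lattice spacing of 1 km at the first octave.
    const double n = fbm(x / 1000.0, y / 1000.0, z / 1000.0, 8, 1234);
    return (r - radius) - amplitude * n;
}
```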

I think I'll probably just have to download some game engines and start playing with them to get a feel for the capabilities.

1 hour ago, Gnollrunner said: Does Unity actually support run-time procedural planet-sized worlds?

OK, this just turned into a rant about Unity, so instead I will summarize. Unity, out of the box, does not support large open worlds. You need a very good programmer.

However, this is why people who enjoy making their own terrain tools use Unity: it is small, you aren't conflicting with existing terrain tools, and it does the rendering part for you. (You can just ignore whatever terrain code Unity has; its terrain tools suck, SUCK!)

1 hour ago, Gnollrunner said: Also I don't understand why it would use voxels if it didn't support cavities.

Because cavities are slow. They require complex light and shadow calculations and complex physics, which is often simulated with lots of small collision shapes. Making a cave model in 3D software and importing it will always look better and perform better.

The only reason ever to allow cavities is destructible terrain.

2 hours ago, Gnollrunner said: that the data is still stored on disk

To make infinity you need infinity. Nothing is without cost. These are the two laws of creation.

What this means is that we can't store two numbers in one without a way to extract them. So to save data, you do work. To keep the same quality, the amount of work has to be equal to or larger than the disk space you would otherwise have used.

The reason we save data is to store the work we already did so we don't need to do it again.

2 hours ago, Gnollrunner said: I don't use any stored data so there is no loss.

9 hours ago, Gnollrunner said: Chunks twice as far away will be twice as large but the voxels will also be twice as big

This is the loss I am talking about, the loss of quality.

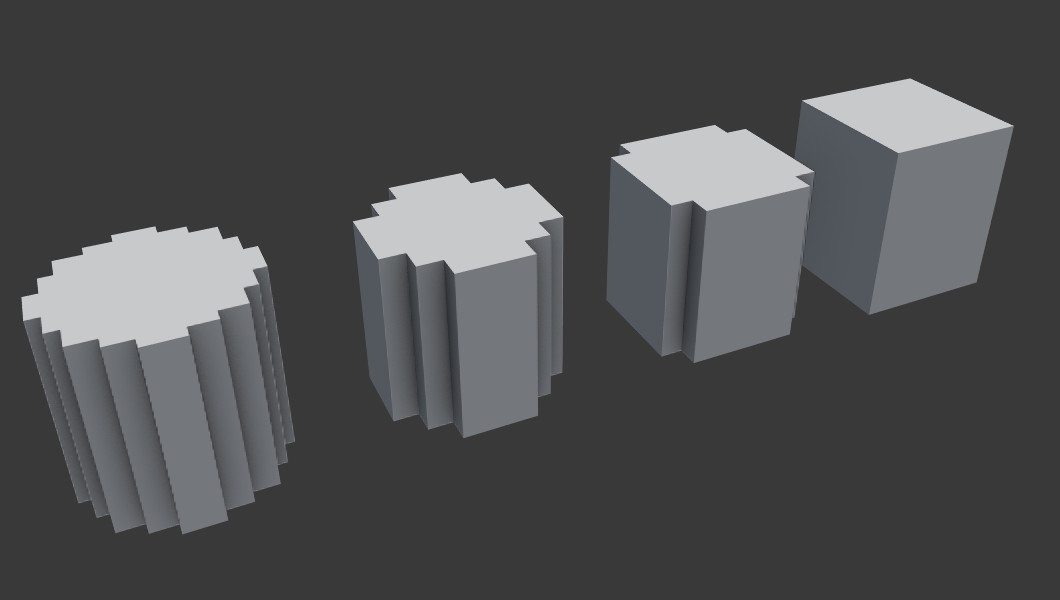

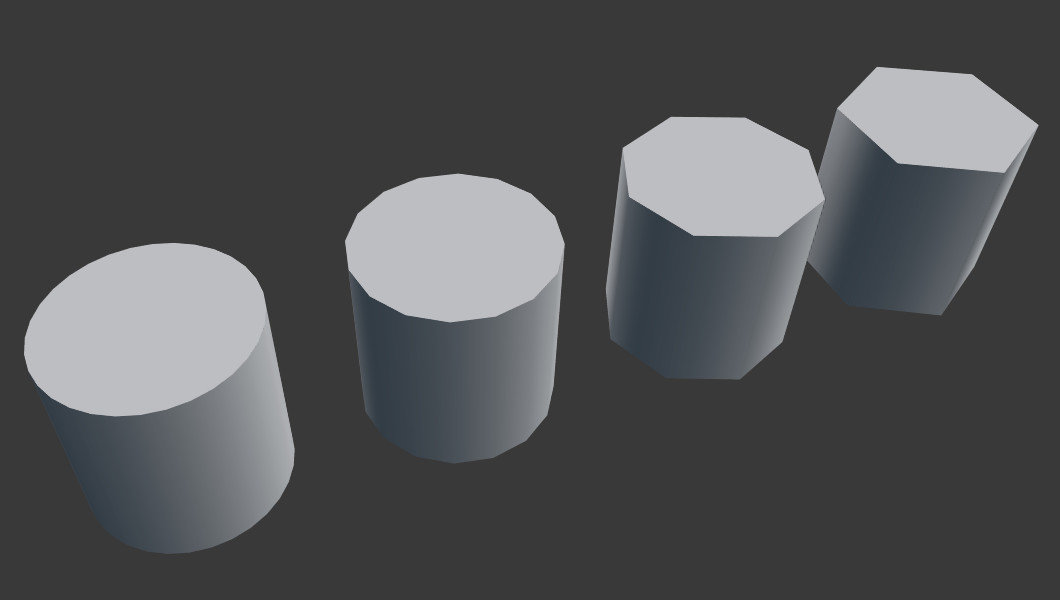

See how the shape loses quality and clarity as you move away; this is Blender's octree. The reverse is also true: the closer you get, the more blocks have to be made and rendered. Switching between these levels of data is much more noticeable than the "polygon pop" from LOD managers.

Even marching cubes, the best voxel to mesh system, suffers from the loss of detail problem.

You aren't the first person to think of this: https://www.youtube.com/watch?v=00gAbgBu8R4 Notice how they avoid looking into the distance and moving, because they don't want the viewer to see the ugly "pop" from the voxels. They also run at around 20-30 FPS.

The problem with such a system is that you keep track of tons and tons of pointless data, either by storing it in RAM or on disk, or by calculating it. The methods used now only calculate what is needed; the result is this: https://www.youtube.com/watch?v=gM6QkPsA2ds

Unreal only generates small details when you are near an object, and it gets them as a 2D data list from the texture. This saves all the time spent calculating details from nothing.

OK, a few things. Yes there is a loss of quality when the voxels are bigger; however, the voxels are only larger when they are far away, so that's generally what you want. It's the same as LOD.

Also I'm not claiming to have invented run time procedural terrain; this is just my implementation. However, I don't think I'm doing the same thing as in the video you posted. I'm still using triangle meshes like everyone else; they are just generated at run time from functions rather than stored on disk. There are no point clouds or anything like that. I'm not even familiar with them.

My main goal is actually not better graphics but a larger world with small disk usage. I want to have an MMO with all players on a single shard and a lot of area to explore, and you need a lot of terrain for that. I also don't want unnatural zone boundaries. In fact there are no zones at all, at least not as far as the voxel code is concerned; it's just continuous terrain.

As for collision, for my purposes I really only need sphere-to-mesh collisions, and by stretching the data I can also do ellipsoid-to-mesh collisions. At least in my code, cavities make no difference: it's just triangles, and the sphere slides off them in the same way. Lighting is a different matter, but then again I'm not aiming for ultimate graphics. I would rather support caves that go deep underground and things like that, which is easy to do with voxels.
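Sphere-to-mesh collision with sliding is commonly built on a closest-point-on-triangle query, as described in Christer Ericson's *Real-Time Collision Detection*. The sketch below pushes a sphere out of one triangle and removes the velocity component heading into the surface. `resolveSphereTriangle`, `Contact`, and `slide` are hypothetical names, and a full version would loop over the candidate triangles from the octree. The stretching trick mentioned above corresponds to scaling the triangle vertices by the inverse ellipsoid radii and then testing against a unit sphere.

```cpp
#include <cmath>

struct V3 { double x, y, z; };
V3 operator+(V3 a, V3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
V3 operator-(V3 a, V3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
V3 operator*(V3 a, double s) { return {a.x * s, a.y * s, a.z * s}; }
double dot(V3 a, V3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
V3 cross(V3 a, V3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Closest point on triangle abc to p, found by checking which Voronoi region
// (vertex, edge, or face) of the triangle p falls into.
V3 closestPointOnTriangle(V3 p, V3 a, V3 b, V3 c) {
    const V3 ab = b - a, ac = c - a, ap = p - a;
    const double d1 = dot(ab, ap), d2 = dot(ac, ap);
    if (d1 <= 0 && d2 <= 0) return a;                                    // vertex a
    const V3 bp = p - b;
    const double d3 = dot(ab, bp), d4 = dot(ac, bp);
    if (d3 >= 0 && d4 <= d3) return b;                                   // vertex b
    const double vc = d1 * d4 - d3 * d2;
    if (vc <= 0 && d1 >= 0 && d3 <= 0) return a + ab * (d1 / (d1 - d3)); // edge ab
    const V3 cp = p - c;
    const double d5 = dot(ab, cp), d6 = dot(ac, cp);
    if (d6 >= 0 && d5 <= d6) return c;                                   // vertex c
    const double vb = d5 * d2 - d1 * d6;
    if (vb <= 0 && d2 >= 0 && d6 <= 0) return a + ac * (d2 / (d2 - d6)); // edge ac
    const double va = d3 * d6 - d5 * d4;
    if (va <= 0 && (d4 - d3) >= 0 && (d5 - d6) >= 0)                    // edge bc
        return b + (c - b) * ((d4 - d3) / ((d4 - d3) + (d5 - d6)));
    const double denom = 1.0 / (va + vb + vc);                           // face interior
    return a + ab * (vb * denom) + ac * (vc * denom);
}

struct Contact { bool touching; V3 normal; };

// If the sphere overlaps the triangle, move its centre out along the contact
// normal until it just touches, and report that normal.
Contact resolveSphereTriangle(V3& center, double radius, V3 a, V3 b, V3 c) {
    const V3 q = closestPointOnTriangle(center, a, b, c);
    const V3 d = center - q;
    const double dist2 = dot(d, d);
    if (dist2 >= radius * radius) return {false, {0.0, 0.0, 0.0}};
    const double dist = std::sqrt(dist2);
    V3 n;
    if (dist > 1e-12) {
        n = d * (1.0 / dist);
    } else {
        // Centre lies exactly on the triangle: fall back to the face normal
        // (its sign depends on the triangle's winding order).
        const V3 fn = cross(b - a, c - a);
        n = fn * (1.0 / std::sqrt(dot(fn, fn)));
    }
    center = q + n * radius;
    return {true, n};
}

// Remove the part of the velocity that points into the surface, so the
// sphere slides along it instead of stopping dead.
V3 slide(V3 velocity, V3 n) {
    const double into = dot(velocity, n);
    return into < 0 ? velocity - n * into : velocity;
}
```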

Quote: The reason we save data is to store the work we already did so we don't need to do it again.

True, but loading from disk is slow, and you have to figure out when to load, how much to load, etc. It's not obvious to me that it's better. Fractal terrain is seamless, and the fractal functions run surprisingly fast. What works for me is to use one or more threads to generate the next iteration of the geometry while another thread runs the game. When the new version of the world is ready, I just swap in the new chain of meshes; in my next version I'm going to fade the new ones in and the old ones out. Even if it takes a few seconds to generate the next iteration, it doesn't matter, because the player can only move so far in a few seconds.
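The "generate in the background, then swap" scheme described above can be sketched with a mutex-guarded `std::shared_ptr`. `WorldSnapshot`, `WorldSwapper`, and `generatorLoop` are hypothetical stand-ins; the real work of running the fractal functions and building meshes is replaced by filler data.

```cpp
#include <atomic>
#include <memory>
#include <mutex>
#include <utility>
#include <vector>

// Hypothetical stand-in for one complete "chain of meshes" for the world.
struct WorldSnapshot {
    int generation = 0;
    std::vector<float> vertices;  // placeholder for real mesh data
};

// Holds the snapshot the game thread should currently draw.
class WorldSwapper {
public:
    // Game/render thread: take a reference to the newest finished snapshot.
    std::shared_ptr<const WorldSnapshot> current() const {
        std::lock_guard<std::mutex> lock(mutex_);
        return current_;
    }
    // Generator thread: publish a fully built snapshot in one step.
    void publish(std::shared_ptr<const WorldSnapshot> next) {
        std::lock_guard<std::mutex> lock(mutex_);
        current_ = std::move(next);
    }
private:
    mutable std::mutex mutex_;
    std::shared_ptr<const WorldSnapshot> current_ = std::make_shared<WorldSnapshot>();
};

// Generator thread body: build each iteration off to the side, then swap it in.
void generatorLoop(WorldSwapper& swapper, std::atomic<bool>& running, int iterations) {
    for (int g = 1; g <= iterations && running.load(); ++g) {
        auto next = std::make_shared<WorldSnapshot>();
        next->generation = g;
        // Placeholder for running the fractal functions and building meshes.
        next->vertices.assign(1000, static_cast<float>(g));
        swapper.publish(std::move(next));
    }
}
```

The game would start the generator with something like `std::thread(generatorLoop, std::ref(swapper), std::ref(running), n)`. Because the render thread holds its own `shared_ptr`, an old snapshot stays alive until the render thread releases it, which fits the planned fade-out of old meshes.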

2 hours ago, Gnollrunner said: Also I'm not claiming to have invented run time procedural terrain...

Sorry, that wasn't what I meant. I meant that you don't need to start from scratch. Many people work on similar ideas and have content to share.

What you are looking for is known as Marching Cubes. Basically, you have voxel data that a mesh is drawn over. There was a good tutorial here on GameDev.net explaining how to make it, with samples.
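The core per-edge step of marching cubes (and of a marching-prisms variant) is finding where the density changes sign along a cell edge and linearly interpolating the vertex position there. The sketch below shows only that step; the case tables that connect edge vertices into triangles are omitted, and `P3`, `edgeCrossing`, and `edgeIsCut` are illustrative names.

```cpp
struct P3 { double x, y, z; };

// True if the edge straddles the surface (one corner inside, one outside).
bool edgeIsCut(double d0, double d1) { return (d0 < 0.0) != (d1 < 0.0); }

// Given densities d0 at p0 and d1 at p1 with opposite signs, return the point
// on the edge where the linearly interpolated density crosses zero.
P3 edgeCrossing(P3 p0, double d0, P3 p1, double d1) {
    const double t = d0 / (d0 - d1);  // lies in [0, 1] when the signs differ
    return {p0.x + t * (p1.x - p0.x),
            p0.y + t * (p1.y - p0.y),
            p0.z + t * (p1.z - p0.z)};
}
```

Because neighbouring cells share edges, computing each crossing once per edge also keeps the generated mesh watertight.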

2 hours ago, Gnollrunner said: Yes there is a loss of quality when the voxels are bigger however...

It gets much bigger faster than you would expect.

For example, moons and planets would end up as cubes. Any map chunk six or more chunks away has to be almost flat. The result is that the terrain has this collapsing effect.

The reason is rendering power: even engines like Unreal average around 8,000,000 polygons, and Unity around 6,000,000. A simple block takes at least 12 polygons.

This means that even with Unreal you get roughly 666,000 blocks to draw your whole world, which is more or less the same as the Unreal terrain.
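The block count follows from a cube having 6 faces of 2 triangles each, taking the figure of 8,000,000 polygons above as the per-frame budget:

$$
\frac{8\,000\,000\ \text{triangles}}{6 \times 2\ \text{triangles per cube}} \approx 666\,667\ \text{cubes}
$$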

I like your idea; I am just pointing out that most game engines already use octrees and such, yet they still have limited world sizes.

Quote: What you are looking for is known as Marching Cubes.

Yes, it's marching cubes, or rather marching prisms in my case. It also has a lot of extra logic in certain cases to get rid of ambiguities and to handle transitions from high-resolution voxels to lower-resolution voxels. In addition, each prism is a node in an octree; there is no big 3D array. I thought of using dual contouring, but I didn't like that it can create non-manifold geometry, and that triangles in the mesh naturally extend outside the cells, so I couldn't use my octree for collision.
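One way a triangular prism can serve as an octree node: splitting its triangle at the edge midpoints gives 4 smaller triangles, and halving its height gives 2 layers, for 8 children in total. The following is a flat-space simplification with hypothetical types (`Prism`, `subdivide`, `volume`); on a planet the prisms would presumably be radial shells, and the poster's actual subdivision is not described in the thread.

```cpp
#include <array>
#include <cmath>

struct Vec2 { double x, y; };

// A triangular prism cell: a triangle extruded between two heights.
struct Prism {
    std::array<Vec2, 3> tri;
    double zMin, zMax;
};

Vec2 mid(Vec2 a, Vec2 b) { return {(a.x + b.x) * 0.5, (a.y + b.y) * 0.5}; }

// Split a prism into 8 children: 4 triangles (edge-midpoint subdivision)
// times 2 height layers, so each prism can be an octree node.
std::array<Prism, 8> subdivide(const Prism& p) {
    const Vec2 a = p.tri[0], b = p.tri[1], c = p.tri[2];
    const Vec2 ab = mid(a, b), bc = mid(b, c), ca = mid(c, a);
    const std::array<std::array<Vec2, 3>, 4> tris{{
        {a, ab, ca}, {ab, b, bc}, {ca, bc, c},
        {ab, bc, ca}  // the centre triangle
    }};
    const double zMid = (p.zMin + p.zMax) * 0.5;
    std::array<Prism, 8> out{};
    for (int i = 0; i < 4; ++i) {
        out[i] = {tris[i], p.zMin, zMid};      // lower layer
        out[i + 4] = {tris[i], zMid, p.zMax};  // upper layer
    }
    return out;
}

// Prism volume = triangle area x height.
double volume(const Prism& p) {
    const Vec2 a = p.tri[0], b = p.tri[1], c = p.tri[2];
    const double area = 0.5 * std::fabs((b.x - a.x) * (c.y - a.y) - (c.x - a.x) * (b.y - a.y));
    return area * (p.zMax - p.zMin);
}
```

Each child has exactly one eighth of the parent's volume, just like a cube octree, so depth in the tree maps directly to voxel size.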

Quote: It gets much bigger faster than you would expect.

Well, in my case voxels stay roughly the same size relative to the camera. This worked well even in my old version; it hasn't really been an issue, and I didn't notice any of the problems you are describing. Also, I don't draw in blocks. It's normal terrain, like you would generate with marching cubes. Sometimes people think of Minecraft when they hear the word voxel, but that's just a subset of what you can do with voxels. Since there is a horizon, I don't need to render very far out. If you fly upwards you can of course see more of the planet, but in that case the LOD starts to take effect in the altitude direction, so it again reduces the data size.
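The horizon argument can be made concrete. On a smooth sphere of radius R, an eye at height h sees the horizon at tangent distance d = sqrt(h(2R + h)), and a peak of height H beyond the horizon stays visible out to the sum of the two tangent distances. This sketch covers only that geometry; real terrain is not a smooth sphere, so a culling distance would need a margin for the tallest terrain (the `maxPeak` parameter here is an assumption).

```cpp
#include <cmath>

// Distance from an eye at height h above a smooth sphere of radius R to the
// horizon: the sight line is tangent to the sphere, so d^2 + R^2 = (R + h)^2,
// which gives d = sqrt(h * (2R + h)).
double horizonDistance(double radius, double height) {
    return std::sqrt(height * (2.0 * radius + height));
}

// Farthest distance at which a peak of height maxPeak can still poke above
// the horizon: both tangent distances lie along the same grazing sight line.
double visibleRange(double radius, double eyeHeight, double maxPeak) {
    return horizonDistance(radius, eyeHeight) + horizonDistance(radius, maxPeak);
}
```

With R = 6,371 km (Earth's mean radius, used only as an example) and an eye 2 m above the ground, the horizon is roughly 5 km away, and it grows with the square root of altitude as you fly up.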